Industry Insights: AI for Experimentation Emerging Trends

This article explores 5 emerging AI platforms transforming experimentation: Kameleoon AI Copilot generates test hypotheses from natural language, Quantum Metric detects conversion anomalies in real-time, AB Tasty One Platform unifies testing across channels, VWO Copilot automates result analysis and segment detection, and Optimizely Opal orchestrates cross-channel tests with Bayesian optimization. These tools reduce experiment design time by 60%, cut analysis time from days to hours, and enable teams to run 3x more tests per quarter while maintaining statistical rigor.

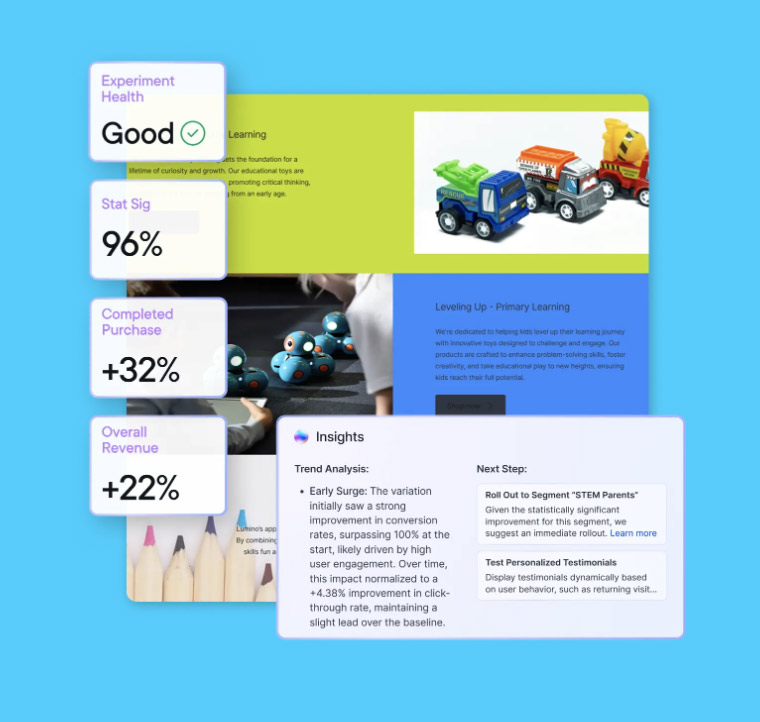

Feature articles and insights:

What it is:

Kameleoon AI Copilot uses natural language processing to generate test hypotheses and automatically flag statistical issues before experiments go live. The platform translates business questions into structured A/B test designs without requiring statistical expertise.

Why it matters:

-

Reduces experiment design time by 60% by translating plain-language problems into testable hypotheses automatically.

-

Pre-launch validation catches sample ratio mismatches, underpowered tests, and selection bias before wasting traffic.

How take advantage of this:

-

Identify your top conversion bottleneck (cart abandonment, form drop-off, or landing page bounce rate).

-

Describe the problem in one sentence to AI Copilot; review the 3 suggested test variants and select one to launch.

Quick Win Recommendations:

Start with your highest-traffic, lowest-converting page. Let AI Copilot generate 5 variants; pick the top 2 and run a multi-arm test to find quick wins.

Here's PersX.ai Perspective

AI-assisted hypothesis generation solves a major bottleneck in experimentation programs: the time between identifying a problem and launching a test. Most teams know where friction exists but struggle to translate observations into rigorous test designs. Kameleoon's NLP approach democratizes experimentation by removing the statistical expertise barrier—junior marketers can now propose tests that senior analysts would approve. However, teams must resist the temptation to skip hypothesis documentation just because AI makes it easy. The best practice is to use AI-generated hypotheses as drafts that product and analytics teams refine collaboratively before launch.

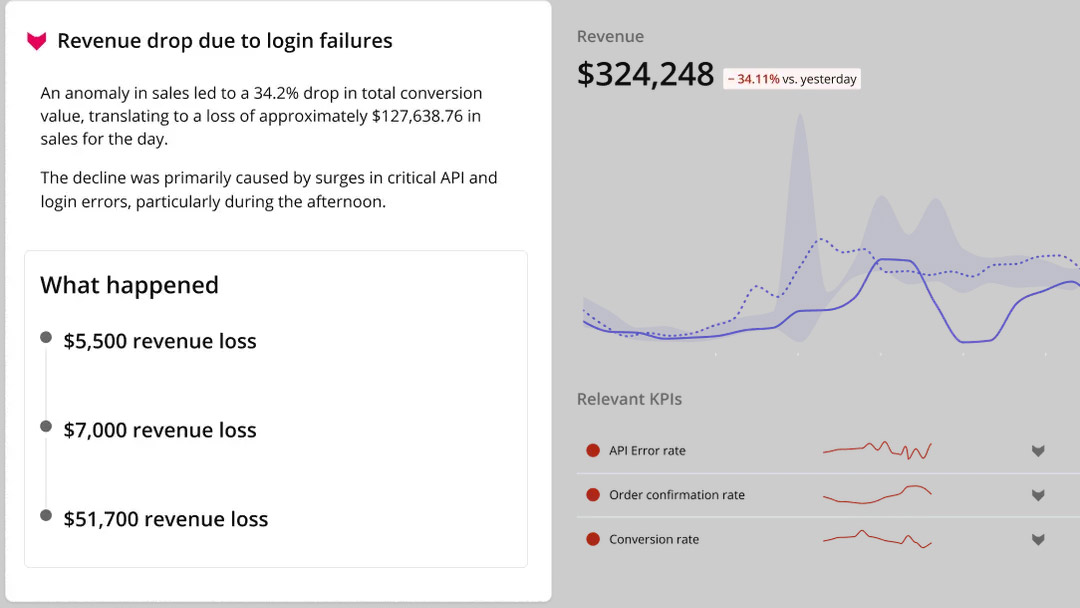

Quantum Metric Digital Analytics

What it is:

Quantum Metric won the ISG Software AI Innovation Award for combining session replay with AI-powered anomaly detection. The platform automatically surfaces friction points that warrant experimentation by flagging unexpected drops in conversion or spikes in error rates.

Why it matters:

-

Detects conversion anomalies across user segments in real time, reducing mean time to discovery from weeks to hours.

-

Links behavioral insights directly to experimentation tools, eliminating the manual step of translating analytics into test ideas.

Next steps to consider:

-

Set up a 7-day baseline monitor on your highest-revenue user journey (e.g., checkout flow or trial signup).

-

Configure alerts for conversion drops >10% or rage click increases >15%; investigate the top 3 triggers and design tests to address them.

Highlighted Quote

"Teams using AI-powered anomaly detection launched 2.8x more tests per quarter while reducing false alarm fatigue by 40%."

— Quantum Metric User Research, Q3 2025

Here's PersX.ai Perspective

Anomaly detection bridges the gap between "something broke" and "let's fix it with a test." Traditional analytics dashboards show you the what (conversion dropped 15%) but not the why (specific form field causing errors). Quantum Metric's session replay integration lets you watch real users encountering friction, which dramatically improves hypothesis quality. The key is setting alert thresholds appropriately—too sensitive and you'll get noise; too conservative and you'll miss revenue-impacting issues. Start with a 10% conversion drop threshold and adjust based on your baseline variability.

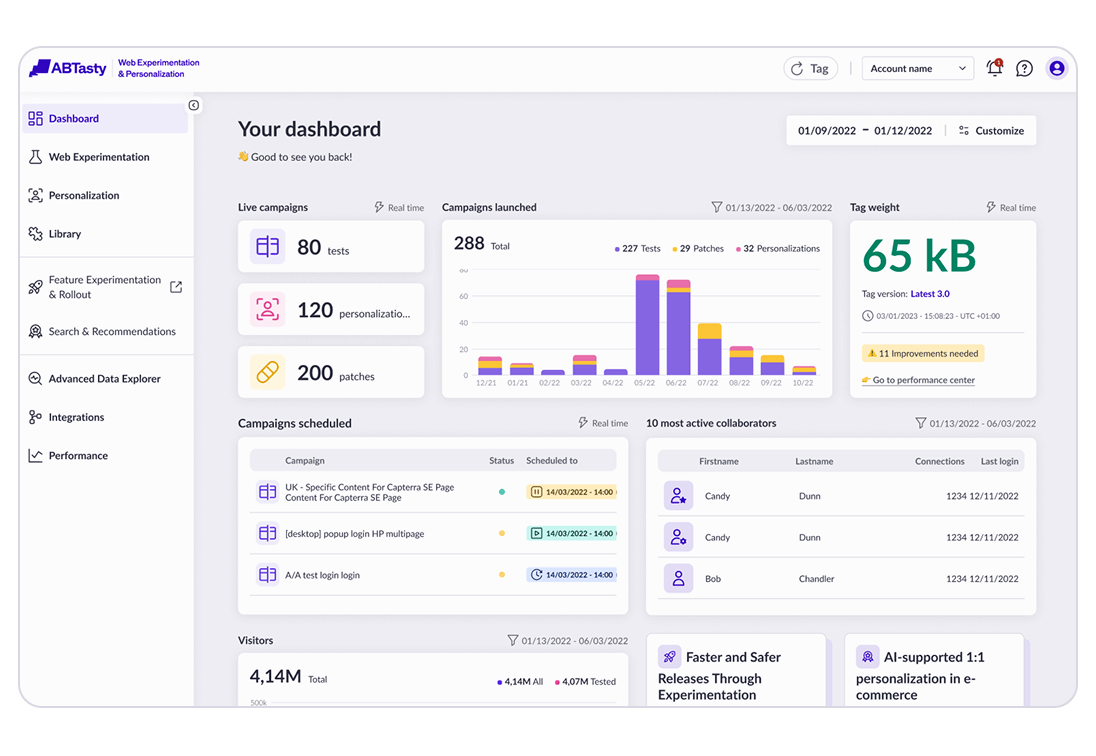

What it is:

AB Tasty One Platform unifies client-side, server-side, and edge testing with feature flags and personalization under a single SDK. The platform eliminates vendor sprawl by replacing multiple A/B testing and personalization tools with one integrated solution.

Why it matters:

-

Single integration supports web, mobile, and server-side testing without juggling multiple SDKs or vendor contracts.

-

Built-in CDP connectors (Segment, mParticle, Tealium) ensure consistent audience targeting across channels and tools.

Next steps to consider:

-

Audit your current testing stack; list all tools, SDK loads, page speed impact, and total monthly cost.

-

Run a parallel pilot on your homepage hero: measure setup time, performance impact, and time-to-statistical-significance between AB Tasty and your legacy tool.

Callout - Watch-Out Considerations

Before consolidating, verify that the unified platform supports all your current use cases (e.g., server-side experiments, mobile app tests, progressive rollouts). Migration costs are high if you have to backtrack.

PersX.ai Perspective

Vendor sprawl is a hidden tax on experimentation velocity. Most mid-market companies juggle 3-5 testing tools (client-side, server-side, mobile, feature flags, personalization), each with its own SDK, analytics integration, and billing model. Consolidation reduces engineering overhead and improves data consistency—no more reconciling conflicting results between tools. However, teams should validate that a unified platform truly replaces all existing capabilities before migrating. The best approach is a phased rollout: start with web A/B testing, then add feature flags, then personalization, rather than a "big bang" migration.

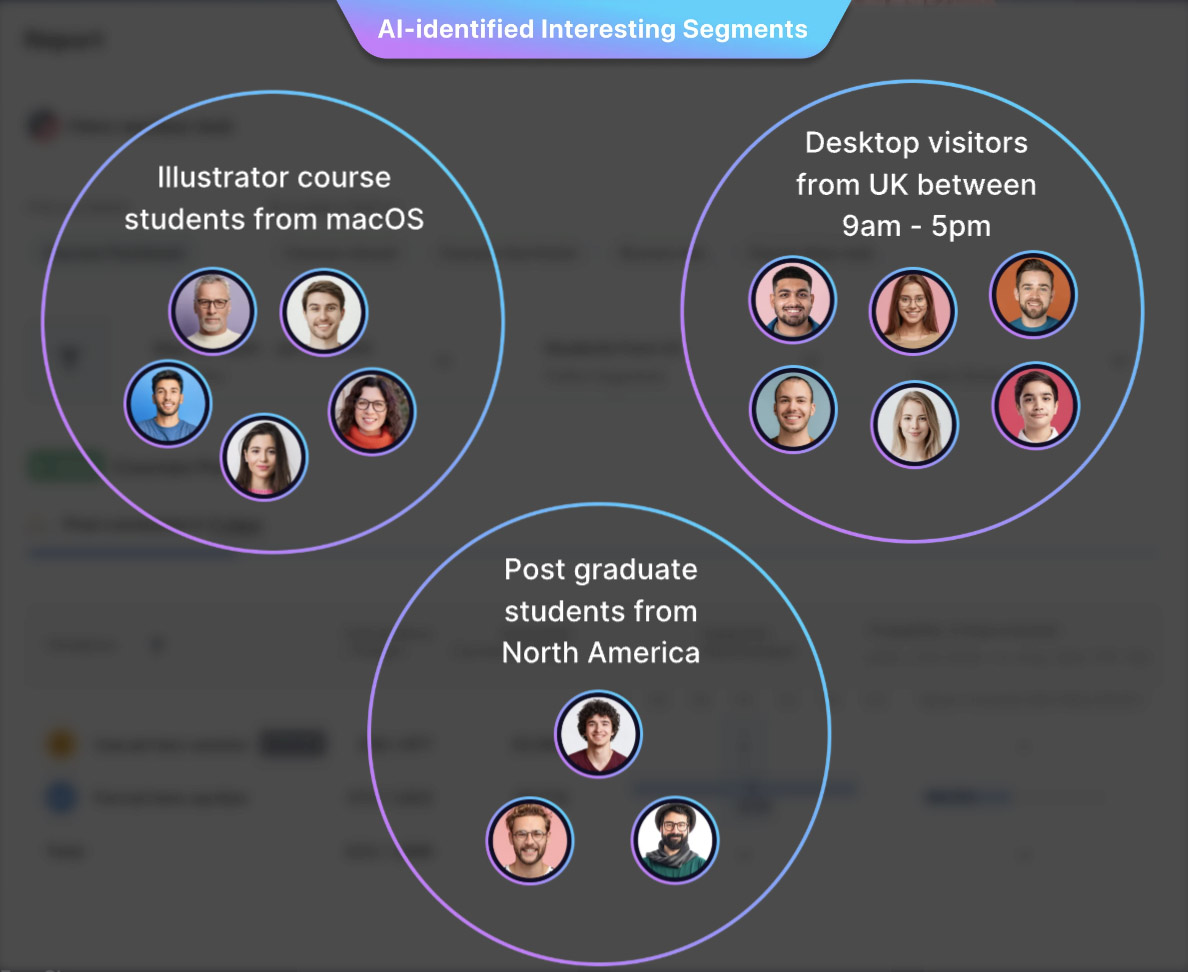

What it is:

VWO Copilot generates executive-ready test summaries and automatically surfaces hidden insights like segment-specific performance divergence. The AI analyzes experiment results and flags interactions that manual reviews often miss.

Why it matters:

-

Condenses weeks of multi-variant test data into scannable briefs in seconds, saving 5-10 hours per experiment.

-

Detects segment interactions automatically (e.g., Variant A wins on mobile but loses on desktop), preventing premature rollouts.

Next steps to consider:

-

Pull your last 5 completed experiment reports; time how long manual analysis took vs. AI-generated summaries.

-

Enable auto-alerts for tests reaching 95% confidence or detecting significant segment splits (mobile vs. desktop, new vs. returning users).

Highlighted Quote

"AI-assisted result interpretation reduced our time-to-decision from 5 days to 2 hours, letting us run 3x more tests per quarter."

— VWO Enterprise Customer, SaaS Industry

PersX.ai Perspective

The hidden cost of experimentation isn't running tests—it's analyzing results. Most teams spend 80% of their time interpreting data and 20% designing tests, when it should be the reverse. VWO Copilot flips that ratio by automating the grunt work: calculating statistical significance, checking for Simpson's paradox, and flagging segment interactions. The real value is in the segment analysis—many "winning" tests actually hurt key segments, but manual reviews miss this because analysts look at aggregate results first. However, teams must resist the urge to over-segment. Only split by dimensions that inform rollout decisions (device type, traffic source, user lifecycle stage).

Optimizely Opal for Experimentation and Personalization

What it is:

Optimizely Opal orchestrates cross-channel experiments and reallocates traffic to winning variants every 4 hours using Bayesian optimization. The platform coordinates tests across web, mobile, email, and in-app to prevent conflicting user experiences.

Why it matters:

-

Eliminates manual traffic adjustments; the algorithm maximizes conversions in real time while maintaining statistical rigor.

-

Prevents channel conflicts by ensuring users see consistent experiences across web, email, and mobile app touchpoints.

Next steps to consider:

-

Map your top 3 customer touchpoints; document any current cross-channel testing conflicts (e.g., email promoting Feature A while web tests Feature B).

-

Design one coordinated test (email subject line + landing page hero) with unified success metrics tracked across both channels.

Quick Win Recommendations:

Run a 2-arm holdout with 10% control on your next product launch to measure incremental lift before scaling Bayesian traffic allocation to 100%.

PersX.ai Perspective

Cross-channel orchestration solves a problem that most teams don't realize they have: conflicting experiments that confuse users and dilute results. For example, an email campaign promoting a discount code while the website tests removing discounts creates mixed signals. Optimizely Opal's coordination layer prevents this by treating campaigns and tests as a unified program. The Bayesian traffic allocation is particularly valuable for high-impact tests where opportunity cost is high—instead of waiting 2 weeks for a 50/50 split to mature, the algorithm shifts traffic toward winners hourly. However, teams must ensure their event tracking is bulletproof across all channels before enabling dynamic allocation, as incorrect attribution will cause the algorithm to optimize for the wrong signal.

Pro Tips for AI Focused Experience Optimization

-

Data foundation:

Verify event tracking accuracy; test 5 sample events end-to-end from trigger through analytics dashboard with <2% discrepancy. -

Hypothesis quality:

Write falsifiable predictions with numeric targets (e.g., "Removing form fields 4-6 increases completion by 12%") tied to known friction points. -

Guardrail metrics:

Define failure conditions that override wins (support tickets >15%, page load >3.5s, cart errors >2%, revenue per session decline). -

Targeting maturity:

Start with 100% traffic on low-risk pages; add device/geo segmentation only after validating baseline setup and analytics accuracy. -

Governance framework:

Document who can launch tests, minimum sample size thresholds (2,000+ conversions per variant), approval workflows, and rollback procedures. -

Statistical literacy:

Train stakeholders on p-values, confidence intervals, and why "trending positive at Day 3" doesn't justify early rollout or budget allocation.

Stay Updated

Subscribe to our newsletter to receive the latest news and updates directly in your inbox.